Regularization in Machine Learning: Meaning

At the point $\tilde{w}$, these competing objectives reach an equilibrium. A Gentle Introduction to Applied Machine Learning as a Search Problem.

Introduction To Regularization Methods In Deep Learning By John Kaller Unpackai Medium

3.2 Regularization Algorithms. In the inverse-problems literature, many algorithms besides Tikhonov regularization are known.

A machine learning interview calls for a rigorous process in which candidates are judged on various aspects, such as technical and programming skills, knowledge of methods, and clarity of basic concepts. Welcome to the second stepping stone of supervised machine learning. If you see an ROC curve like this, it likely indicates there's a bug in your data.

Shallow trees can together make a more accurate predictor. This leads to the area of manifold learning and manifold regularization. Sparse coding algorithms attempt to do so under the constraint that the learned representation is sparse, meaning that the mathematical model has many zeros.
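The idea that many shallow trees can together make a more accurate predictor is the principle behind gradient boosting. The following is a minimal sketch, assuming squared-error loss and one-dimensional inputs: each round fits a depth-1 tree (a "stump") to the current residuals, which for squared loss are the negative gradient, and adds it scaled by a learning rate. The helper names `fit_stump` and `gradient_boost` and the toy data are hypothetical; production libraries add many refinements.

```python
def fit_stump(xs, residuals):
    """Fit a depth-1 regression tree: one threshold split, each side predicts its mean."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        if not left or not right:
            continue  # a split must put at least one point on each side
        lv = sum(left) / len(left)
        rv = sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def gradient_boost(xs, ys, n_rounds, learning_rate):
    """Gradient boosting for squared loss with stumps as the weak learners."""
    base = sum(ys) / len(ys)  # the constant that minimizes squared loss
    stumps = []

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    for _ in range(n_rounds):
        # For squared loss, the negative gradient is the residual y - F(x).
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


# Hypothetical 1-D regression data: y = x^2 on a grid.
xs = [i / 10 for i in range(20)]
ys = [x * x for x in xs]


def mse(model):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


single = gradient_boost(xs, ys, n_rounds=1, learning_rate=1.0)    # one shallow tree
boosted = gradient_boost(xs, ys, n_rounds=100, learning_rate=0.1)  # many shallow trees
print(mse(single), mse(boosted))
```

The `learning_rate` (shrinkage) also acts as a regularizer: smaller steps need more rounds but tend to generalize better.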

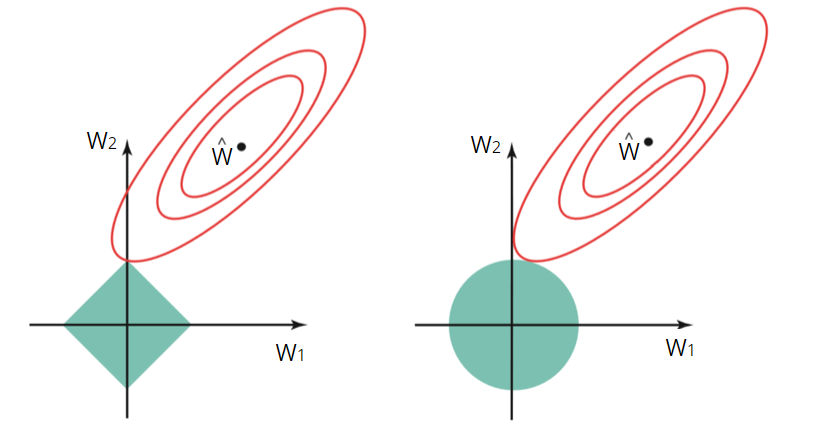

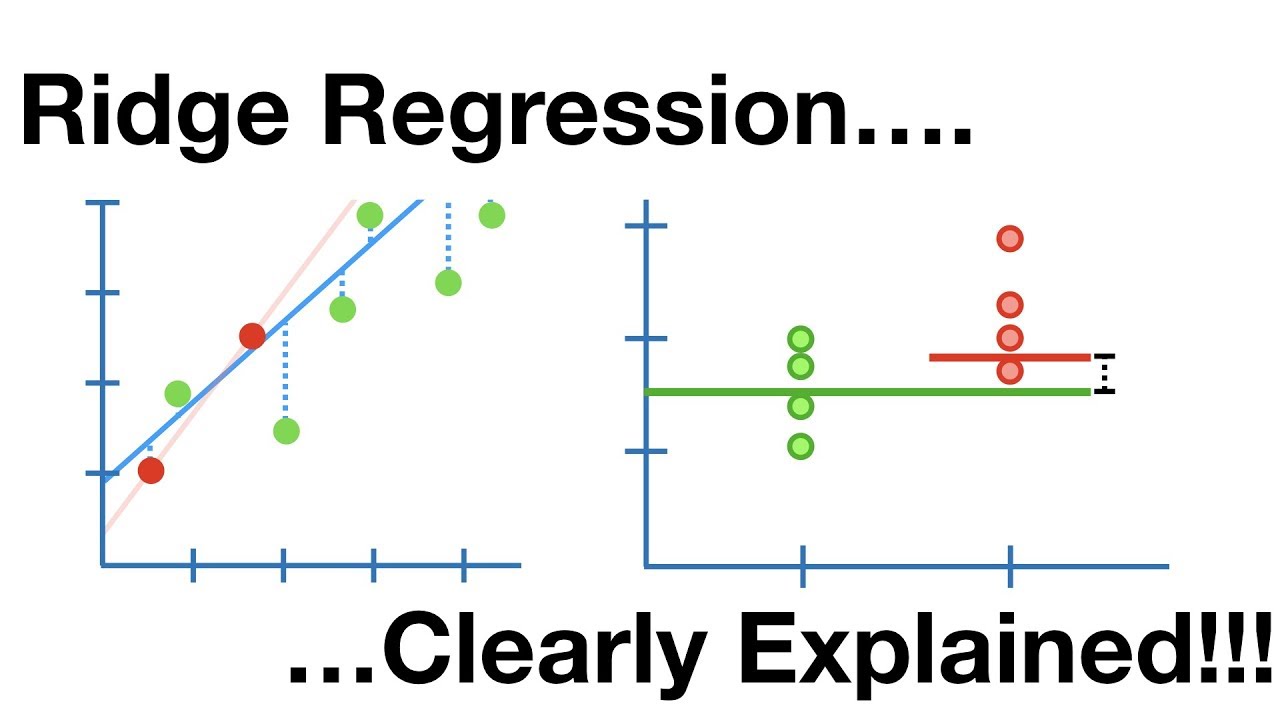

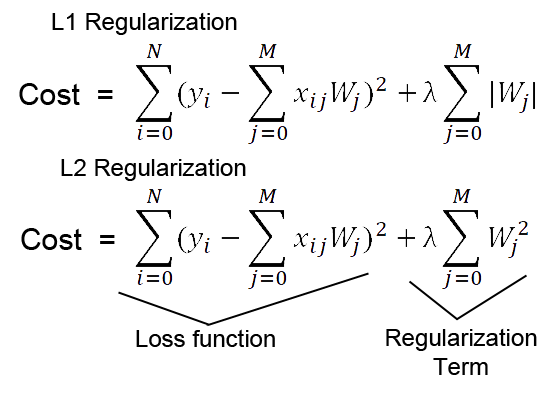

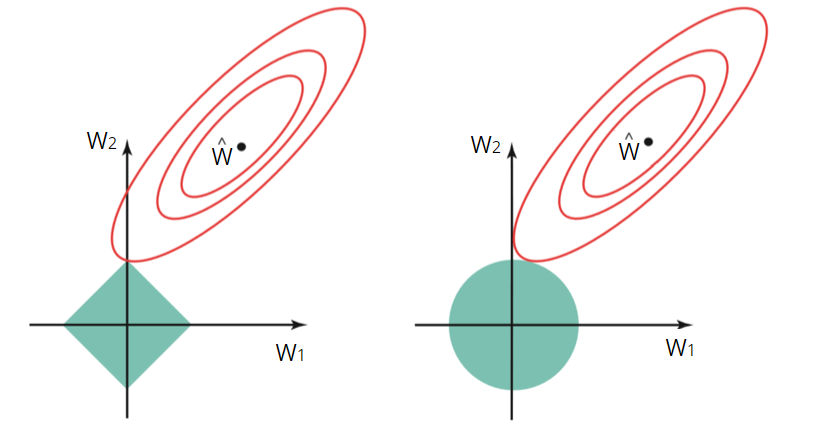

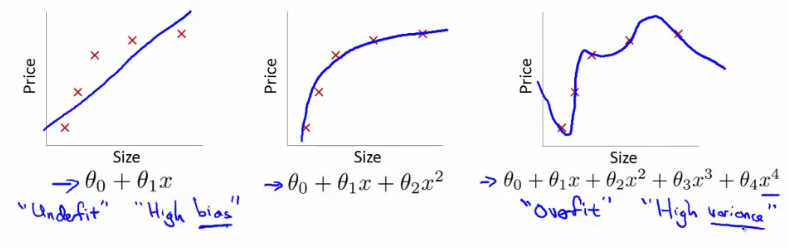

Machine learning models can learn from the data we provide, which lets them improve continuously. An illustration of the effect of L2 (or weight decay) regularization on the value of the optimal $w$. Again, this chapter is divided into two parts.
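The effect of L2 regularization on the optimal $w$ can be reproduced numerically. This is a minimal sketch under a simplifying assumption: the unregularized objective is quadratic around its minimum $w^*$ with a diagonal Hessian $H$, and the penalty is $\frac{\alpha}{2}\lVert w\rVert^2$. The regularized optimum is then $\tilde{w}_i = \frac{H_{ii}}{H_{ii} + \alpha} w^*_i$, so weights along low-curvature directions shrink much more than those along high-curvature ones. The curvature values and $\alpha$ below are hypothetical.

```python
def regularized_optimum(h_diag, w_star, alpha):
    """Closed form for a diagonal Hessian: w_tilde_i = H_ii / (H_ii + alpha) * w_star_i."""
    return [h / (h + alpha) * w for h, w in zip(h_diag, w_star)]


def gradient_descent(h_diag, w_star, alpha, lr=0.1, steps=2000):
    """Minimize 0.5*sum_i H_ii*(w_i - w_star_i)^2 + 0.5*alpha*||w||^2 numerically."""
    w = [0.0] * len(w_star)
    for _ in range(steps):
        grad = [h * (wi - ws) + alpha * wi for h, wi, ws in zip(h_diag, w, w_star)]
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


h_diag = [0.5, 5.0]  # hypothetical: low curvature along w1, high curvature along w2
w_star = [1.0, 1.0]  # unregularized optimum
alpha = 1.0          # weight-decay strength
closed = regularized_optimum(h_diag, w_star, alpha)
gd = gradient_descent(h_diag, w_star, alpha)
print(closed)  # w1 shrinks to 1/3 of w*_1; w2 only to 5/6 of w*_2
print(gd)      # matches the closed form
```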

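For Tikhonov regularization, $G_\lambda(\sigma) = \frac{1}{\sigma + \lambda n}$ (15), meaning $G_\lambda(K)Y = \sum_{i=1}^{n} G_\lambda(\sigma_i)\langle q_i, Y\rangle q_i$, where $\sigma_i$ and $q_i$ are the eigenvalues and eigenvectors of the kernel matrix $K$. Dropout is a technique where randomly selected neurons are ignored during training.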
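Ignoring randomly selected neurons during training can be sketched on a single layer's activations. This minimal sketch uses the "inverted dropout" variant common in modern frameworks, which scales the surviving units by $1/(1-p)$ during training; the 2014 dropout paper instead scales the weights at test time, and the two agree in expectation. The function name `dropout` and its parameters are hypothetical.

```python
import random


def dropout(activations, p_drop, training, rng):
    """Inverted dropout on a list of activations.

    During training, each unit is zeroed with probability p_drop and the
    survivors are scaled by 1 / (1 - p_drop), so the expected value of every
    unit is unchanged. At test time the activations pass through untouched.
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # seeded so the example is reproducible
h = [1.0] * 10_000      # hypothetical layer output: 10,000 units, all equal to 1
out = dropout(h, p_drop=0.5, training=True, rng=rng)
print(sum(1 for x in out if x == 0.0) / len(out))  # fraction dropped, close to 0.5
print(sum(out) / len(out))                          # mean activation, close to 1.0
print(dropout(h, p_drop=0.5, training=False, rng=rng) == h)  # True: no-op at test time
```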

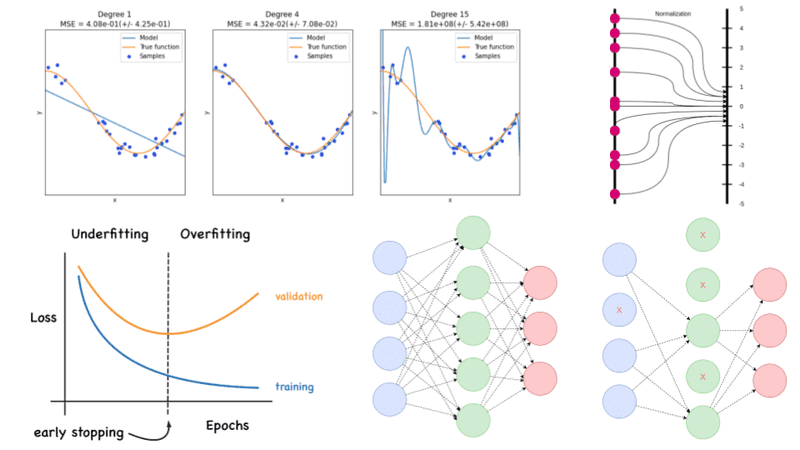

Dropout is a regularization technique for neural network models proposed by Srivastava et al. Each algorithm is defined by a suitable filter function $G_\lambda$. Optimization is at the core of all machine learning algorithms.
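The filter view of Tikhonov regularization can be checked on a small example. Assuming the kernel matrix has an eigendecomposition $K = \sum_i \sigma_i q_i q_i^\top$, applying the filter $G_\lambda(\sigma) = 1/(\sigma + \lambda n)$ to each eigenvalue should give the same coefficients as solving $(K + \lambda n I)c = Y$ directly. The sketch below builds a hypothetical 3×3 matrix $K$ whose eigenvectors are the columns of a Householder reflection, so no eigen-solver is needed; all helper names are illustrative.

```python
def householder(v):
    """Symmetric orthogonal matrix I - 2 v v^T / (v^T v); its columns are orthonormal."""
    vv = sum(x * x for x in v)
    n = len(v)
    return [[(1.0 if i == j else 0.0) - 2.0 * v[i] * v[j] / vv for j in range(n)] for i in range(n)]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def spectral_solution(Q, sigmas, Y, G):
    """Return G(K) Y = sum_i G(sigma_i) <q_i, Y> q_i, with q_i the i-th column of Q."""
    n = len(Y)
    c = [0.0] * n
    for i, s in enumerate(sigmas):
        q = [Q[r][i] for r in range(n)]
        coef = G(s) * sum(q[r] * Y[r] for r in range(n))
        for r in range(n):
            c[r] += coef * q[r]
    return c


# Hypothetical kernel matrix K = Q diag(sigmas) Q^T with known eigenpairs.
Q = householder([1.0, 2.0, 2.0])
sigmas = [3.0, 1.0, 0.25]
n = len(sigmas)
K = [[sum(Q[i][k] * sigmas[k] * Q[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
Y = [1.0, -0.5, 2.0]
lam = 0.1

c_filter = spectral_solution(Q, sigmas, Y, lambda s: 1.0 / (s + lam * n))  # Tikhonov filter
A = [[K[i][j] + (lam * n if i == j else 0.0) for j in range(n)] for i in range(n)]
c_direct = solve(A, Y)  # c = (K + lambda*n*I)^{-1} Y
print(c_filter)
print(c_direct)  # agrees with c_filter up to rounding
```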

If you aspire to apply for machine learning jobs, it is crucial to know what kinds of machine learning interview questions recruiters generally ask. Take the following simple NLP problem. Srivastava et al. introduced dropout in their 2014 paper.

Figure 7.1. Machine learning (ML). Available for free as a PDF: An Introduction to Statistical Learning by James, Witten, Hastie, et al.

The corresponding model actually performs worse than random guessing. Multilinear subspace learning algorithms aim to learn low-dimensional representations directly from tensor representations of multidimensional data. Discover what optimization is.

Gradient Boosting is a machine learning algorithm used for both classification and regression problems. The solid ellipses represent contours of equal value of the unregularized objective. Dropout Regularization For Neural Networks.

No human intervention is necessary, as the decision-making tasks are automated with the help of these models. This ROC curve has an AUC between 0 and 0.5, meaning it ranks a random positive example higher than a random negative example less than 50% of the time. Gradient boosting works on the principle that many weak learners, such as shallow trees, can together make a more accurate predictor.
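The ranking interpretation of AUC can be computed directly: over all (positive, negative) pairs, count how often the positive example gets the higher score, with ties counting as half. This is a minimal sketch with hypothetical scores. It also shows that reversing a model's scores turns an AUC of $a$ into $1 - a$, which is why an AUC well below 0.5 often points to something like flipped labels rather than a model with no signal.

```python
def auc(scores, labels):
    """Probability that a random positive is scored above a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0]
scores = [0.2, 0.3, 0.6, 0.5, 0.7, 0.9]  # hypothetical model scores
print(auc(scores, labels))                   # 1/9: worse than random guessing
print(auc([1 - s for s in scores], labels))  # 8/9: reversing the ranking gives 1 - AUC
```

The pairwise loop is quadratic in the number of examples; it is meant to show the definition, not to be efficient.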

Part 1 (this one) discusses the theory, working, and tuning parameters. The dotted circles represent contours of equal value of the L2 regularizer. Your training sequences probably include.

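These spectral regularization algorithms from the inverse-problems literature are not necessarily based on penalized empirical risk minimization. There's an easier version of this book that covers many of the same topics described below. The 2014 dropout paper by Srivastava et al. is titled "Dropout: A Simple Way to Prevent Neural Networks from Overfitting."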

For example, the sequence "the cat ___" may be followed by "sleeps," "enjoys," or "wants." This will be our main textbook for L1 and L2 regularization, trees, bagging, random forests, and boosting.
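Why L1 regularization produces sparse models while L2 does not is easiest to see in the special case of an orthonormal design ($X^\top X = I$), where both problems have closed forms in terms of the least-squares coefficients $\hat\beta = X^\top y$. Minimizing $\frac{1}{2}\lVert y - X\beta\rVert^2 + \lambda\lVert\beta\rVert_1$ soft-thresholds each coefficient, while minimizing $\frac{1}{2}\lVert y - X\beta\rVert^2 + \frac{\lambda}{2}\lVert\beta\rVert_2^2$ scales each one by $1/(1+\lambda)$. This is a minimal sketch under that orthonormality assumption, with hypothetical coefficients.

```python
import math


def lasso_orthonormal(b_ols, lam):
    """L1 penalty, X^T X = I: soft-thresholding sets |b| <= lam exactly to zero."""
    return [0.0 if abs(b) <= lam else math.copysign(abs(b) - lam, b) for b in b_ols]


def ridge_orthonormal(b_ols, lam):
    """L2 penalty, X^T X = I: every coefficient is shrunk by the same factor."""
    return [b / (1.0 + lam) for b in b_ols]


b_ols = [3.0, -0.4, 0.2, -2.5, 0.05]  # hypothetical least-squares coefficients
lam = 0.5
lasso = lasso_orthonormal(b_ols, lam)
ridge = ridge_orthonormal(b_ols, lam)
print(lasso)  # small coefficients become exactly zero
print(ridge)  # every coefficient shrinks, none becomes zero
```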

It's written by three statisticians who invented many of the techniques discussed. You can quickly get familiar with optimization for machine learning in three steps. Say you want to predict a word in a sequence given its preceding words.
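One of the simplest ways to frame this next-word problem is a count-based bigram model, which predicts the next word from the single preceding word. The text does not specify a model, so this is only a minimal sketch, assuming a tiny hypothetical training corpus; a practical model would condition on more context and smooth its counts.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next word | previous word)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


# Hypothetical training sequences.
corpus = [
    "the cat sleeps",
    "the cat enjoys milk",
    "the cat wants food",
    "the cat sleeps all day",
]
counts = train_bigrams(corpus)
probs = next_word_probs(counts, "cat")
print(probs)                      # {'sleeps': 0.5, 'enjoys': 0.25, 'wants': 0.25}
print(max(probs, key=probs.get))  # sleeps
```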

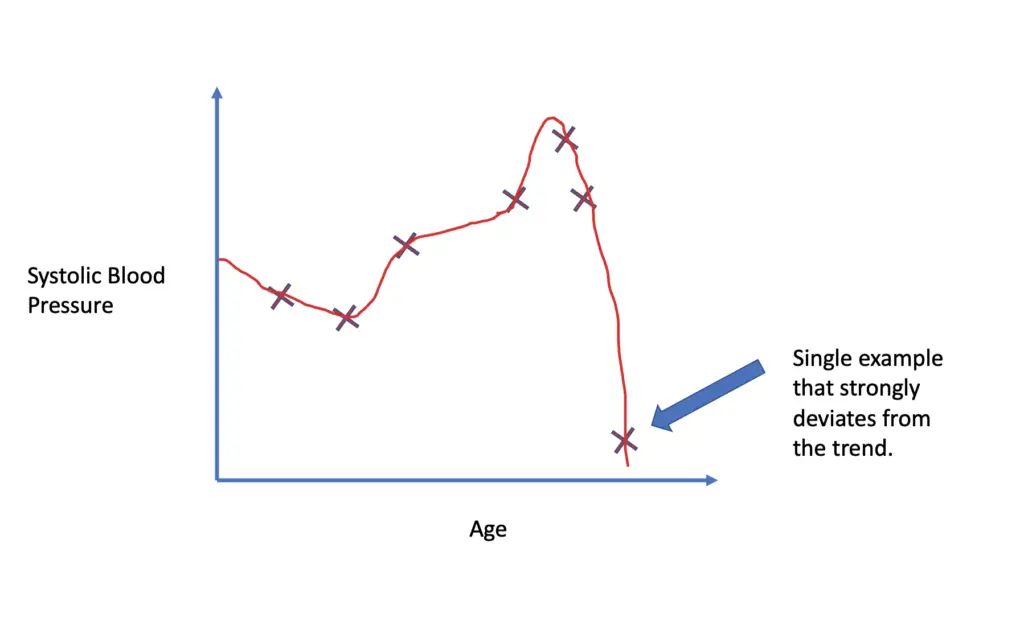

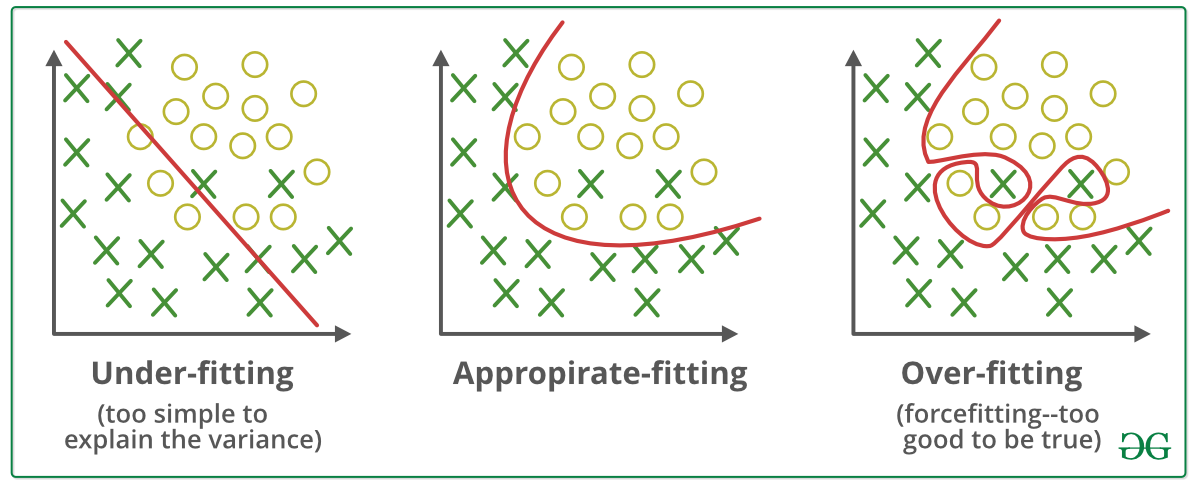

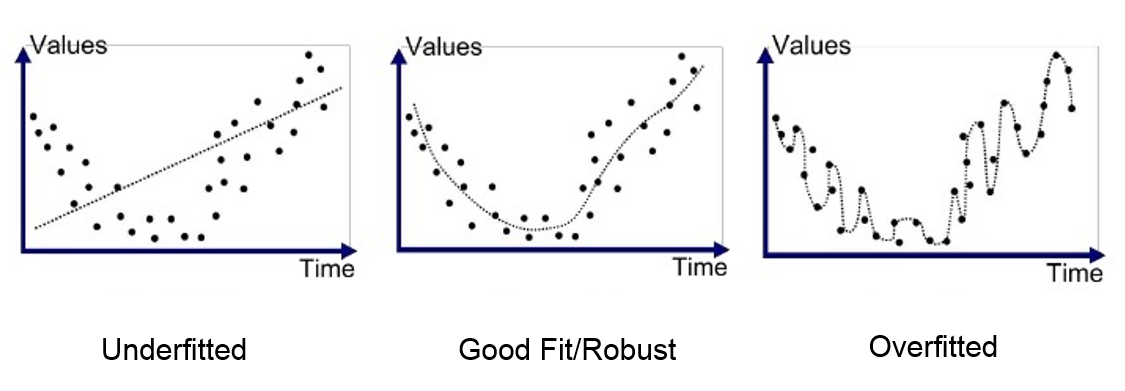

This class of algorithms performs spectral regularization. When we train a machine learning model, it performs optimization on the given dataset.
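Training as optimization and spectral regularization meet in gradient descent on the least-squares objective, known in the inverse-problems literature as Landweber iteration. Starting from $c_0 = 0$ and iterating $c_{t+1} = c_t + \eta(Y - Kc_t)$ gives, after $t$ steps, the filter $G_t(\sigma) = \frac{1 - (1 - \eta\sigma)^t}{\sigma}$, so the number of iterations plays the role of the regularization parameter and stopping early regularizes. This is a minimal sketch with a hypothetical matrix $K$ built from known eigenvectors; the step size must satisfy $\eta < 2/\sigma_{\max}$, and the normalization used here is an assumption that may differ from other presentations.

```python
def householder(v):
    """Symmetric orthogonal matrix I - 2 v v^T / (v^T v); its columns are orthonormal."""
    vv = sum(x * x for x in v)
    n = len(v)
    return [[(1.0 if i == j else 0.0) - 2.0 * v[i] * v[j] / vv for j in range(n)] for i in range(n)]


def spectral_solution(Q, sigmas, Y, G):
    """Return sum_i G(sigma_i) <q_i, Y> q_i, with q_i the i-th column of Q."""
    n = len(Y)
    c = [0.0] * n
    for i, s in enumerate(sigmas):
        q = [Q[r][i] for r in range(n)]
        coef = G(s) * sum(q[r] * Y[r] for r in range(n))
        for r in range(n):
            c[r] += coef * q[r]
    return c


def landweber(K, Y, eta, t):
    """t steps of gradient descent c <- c + eta * (Y - K c), starting from c = 0."""
    c = [0.0] * len(Y)
    for _ in range(t):
        Kc = [sum(k * ci for k, ci in zip(row, c)) for row in K]
        c = [ci + eta * (y - kc) for ci, y, kc in zip(c, Y, Kc)]
    return c


# Hypothetical kernel matrix K = Q diag(sigmas) Q^T with known eigenpairs.
Q = householder([1.0, 2.0, 2.0])
sigmas = [3.0, 1.0, 0.25]
n = len(sigmas)
K = [[sum(Q[i][k] * sigmas[k] * Q[j][k] for k in range(n)) for j in range(n)] for i in range(n)]
Y = [1.0, -0.5, 2.0]
eta, t = 0.2, 5  # eta < 2 / max(sigmas) keeps the iteration stable

c_iter = landweber(K, Y, eta, t)
c_filter = spectral_solution(Q, sigmas, Y, lambda s: (1.0 - (1.0 - eta * s) ** t) / s)
print(c_iter)
print(c_filter)  # same coefficients: early-stopped descent is a spectral filter

# With many iterations the filter approaches 1/sigma, i.e. the unregularized K^{-1} Y.
c_long = landweber(K, Y, eta, 2000)
c_inv = spectral_solution(Q, sigmas, Y, lambda s: 1.0 / s)
```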

Machine learning makes it easier to identify trends and patterns in how customers purchase a company's product.

Regularization In Machine Learning Regularization In Java Edureka

L2 Vs L1 Regularization In Machine Learning Ridge And Lasso Regularization

Regularization Techniques For Training Deep Neural Networks Ai Summer

Regularization In Machine Learning

Regularization Part 1 Ridge L2 Regression Youtube

Regularization In Machine Learning Geeksforgeeks

Regularization Techniques In Deep Learning Kaggle

A Simple Explanation Of Regularization In Machine Learning Nintyzeros

L1 Vs L2 Regularization The Intuitive Difference By Dhaval Taunk Analytics Vidhya Medium

What Is Regularization In Machine Learning

Lasso Vs Ridge Vs Elastic Net Ml Geeksforgeeks

Regularization And Over Fitting An Intuitive And Easy Explanation Of An By Kshitiz Sirohi Towards Data Science

Regularization By Early Stopping Geeksforgeeks

What Is Regularization In Machine Learning Quora

Regularization In Deep Learning L1 L2 And Dropout Towards Data Science

What Is Lasso Regression Definition Examples And Techniques

What Is Machine Learning Regularization For Dummies By Rohit Madan Analytics Vidhya Medium